From Syscalls to Packets: How Code Reaches the Network Stack

Every network-aware program ends up on the wire. Understanding how user-space calls become packets on the line is how you trace malware C2 traffic, patch a socket-level implant, or debug a failed bind() that “should have worked.” This isn’t theory; it is the execution path your packets actually take.

1. User-Space Application and System Calls

The process begins within a user-space application. When an application needs to send data over the network, it typically interacts with the operating system kernel through system calls. These system calls provide a standardized interface for applications to request services from the kernel, including network operations.

Common Network System Calls

Applications use specific system calls for network communication:

- socket(): This system call creates an endpoint for communication and returns a file descriptor. The type of socket (e.g., SOCK_STREAM for TCP, SOCK_DGRAM for UDP) and the protocol family (e.g., AF_INET for IPv4, AF_INET6 for IPv6) are specified here.

- bind(): (Optional, typically for server applications) This system call associates a local address and port with the socket.

- connect(): (For client applications) This system call establishes a connection to a remote socket. For connection-oriented protocols like TCP, this initiates the three-way handshake.

- send() / sendto() / write(): These system calls are used to transmit data over the socket. sendto() is typically used for connectionless protocols (UDP) as it specifies the destination address with each send operation. send() and write() are used for connected sockets.

- close(): This system call closes the socket, releasing its resources.

In short: socket() creates a communication endpoint, bind() (primarily for servers) assigns a local address, connect() (for clients) initiates a connection, send(), sendto(), or write() transmit data, and close() releases the socket's resources. Which calls are used depends on the socket type (e.g., SOCK_STREAM for TCP, SOCK_DGRAM for UDP) and on whether the socket is connected.
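The calls listed above can be exercised end to end over the loopback interface. The following is a minimal sketch, not a production pattern: the function name tcp_roundtrip is hypothetical, and it relies on the kernel completing the handshake against the listen backlog so that a single thread can connect before calling accept().

```python
import socket

def tcp_roundtrip(payload: bytes) -> bytes:
    """Send payload over a loopback TCP connection and return what the peer read."""
    # socket(): create the listening endpoint (IPv4, TCP).
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # bind(): port 0 asks the kernel to pick a free port.
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        addr = server.getsockname()

        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            # connect(): triggers the three-way handshake with the listener.
            client.connect(addr)
            # sendall() loops over send() until every byte has been queued.
            client.sendall(payload)

            conn, _peer = server.accept()
            # close(): each "with" block closes its socket on exit.
            with conn:
                received = b""
                while len(received) < len(payload):
                    chunk = conn.recv(4096)
                    if not chunk:
                        break
                    received += chunk
                return received

print(tcp_roundtrip(b"hello"))  # b'hello'
```

Running the script under strace shows the same sequence as raw system calls: socket, bind, listen, connect, sendto, accept4, recvfrom, close.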

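On Linux, a TCP send() follows roughly this path through the kernel (intermediate functions omitted):

send() → sys_sendto() → sock_sendmsg() → tcp_sendmsg() → dev_queue_xmit()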

Receiving Incoming Packets: From Wire to recv()

The journey of an incoming packet is the reverse of transmission, moving from the physical medium, through the hardware and kernel's network stack, finally reaching a waiting user-space application.

NIC Interrupts and NAPI

The process of receiving a packet typically begins at the Network Interface Card (NIC).

- NIC Reception: When a NIC receives a valid electrical, optical, or radio signal, it reconstructs the digital frame.

- Checksum Validation: The NIC often performs a hardware checksum validation (e.g., FCS/CRC for Ethernet) on the incoming frame.

- Interrupt Generation: Traditionally, upon successful reception and validation of a frame, the NIC raises a hardware interrupt to alert the CPU. The CPU then suspends its current task to execute an Interrupt Service Routine (ISR) associated with the NIC.

Modern high-speed networks generate a large number of interrupts, which can significantly burden the CPU. To mitigate this, the Linux kernel implemented NAPI (New API).

- NAPI's Role: NAPI is a hybrid interrupt/polling mechanism designed for efficient packet processing at high rates. Instead of generating an interrupt for every incoming packet, the NIC generates an interrupt only when new packets arrive after a period of inactivity.

- Polling Mode: Once the interrupt is handled, the NIC driver (now in "NAPI mode") switches to a polling model. It disables further interrupts from the NIC and polls the NIC's receive queues for a batch of packets. This lets the CPU process many packets per interrupt instead of taking one interrupt per packet, reducing interrupt overhead.

- napi_gro_receive: Inside the NAPI polling loop, the driver calls functions like napi_gro_receive (for Generic Receive Offload) which can aggregate multiple smaller packets into a larger sk_buff before passing them up the stack, further reducing processing overhead for the upper layers.
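The interrupt-then-poll behaviour can be illustrated with a toy model. This is a simulation in plain Python, not kernel code: the ToyNic class, its fields, and the budget value are all hypothetical stand-ins for what a real driver and the NAPI core do.

```python
from collections import deque

class ToyNic:
    """Toy model of a NIC RX ring with NAPI-style interrupt/poll switching."""

    def __init__(self, budget: int = 64):
        self.rx_ring = deque()
        self.irq_enabled = True
        self.poll_scheduled = False
        self.interrupts = 0
        self.delivered = []
        self.budget = budget

    def frame_arrives(self, frame):
        self.rx_ring.append(frame)
        if self.irq_enabled:
            # Hard IRQ: mask further RX interrupts and schedule polling.
            self.interrupts += 1
            self.irq_enabled = False
            self.poll_scheduled = True

    def poll(self):
        """Process up to `budget` frames; return how many were handled."""
        done = 0
        while self.rx_ring and done < self.budget:
            self.delivered.append(self.rx_ring.popleft())
            done += 1
        if done < self.budget:
            # Ring drained: leave polling mode and unmask interrupts.
            self.poll_scheduled = False
            self.irq_enabled = True
        return done

nic = ToyNic(budget=4)
for i in range(10):          # a burst of 10 frames arrives back to back
    nic.frame_arrives(i)
while nic.poll_scheduled:    # keep polling until the ring is empty
    nic.poll()
print(nic.interrupts, len(nic.delivered))  # 1 10
```

In this model a burst of ten frames costs one interrupt and three polling passes, which is the effect NAPI aims for under load.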

De-encapsulation in the Kernel

As the sk_buff containing the incoming frame moves up the network stack, the kernel performs the reverse process of de-encapsulation, stripping off headers at each layer.

- Link Layer Processing:

- The NIC driver passes the sk_buff to the link layer.

- The link layer (e.g., Ethernet) removes the MAC header and FCS, validates the frame (if not already done by hardware), and identifies the EtherType to determine the next protocol (e.g., IP).

- Network Layer (IP) Processing:

- The sk_buff is then passed to the network layer (IP).

- The IP module removes the IP header, performs checksum validation (if not offloaded), checks the destination IP address, and handles any IP fragmentation if necessary (reassembling fragments into a complete IP packet).

- The Protocol Number in the IP header indicates the next layer protocol (e.g., TCP or UDP).

- Transport Layer (TCP/UDP) Processing:

- The sk_buff finally reaches the transport layer.

- UDP: For UDP, the UDP module removes the UDP header, performs checksum validation, and delivers the UDP datagram to the appropriate UDP socket based on destination port.

- TCP: For TCP, the TCP module removes the TCP header, processes sequence and acknowledgment numbers, performs checksum validation, handles reordering of segments, and acknowledges received data. It reassembles segments into the original data stream.
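The header stripping described above can be sketched in user space with the struct module. This is only an illustration under simplifying assumptions: the decapsulate function is hypothetical, it handles only IPv4 carrying UDP, it verifies no checksums, and the test frame is hand-built with zeroed MAC addresses and checksums.

```python
import socket
import struct

def decapsulate(frame: bytes):
    """Strip Ethernet, IPv4 and UDP headers; return (src, dst, payload)."""
    # Link layer: 6-byte dst MAC, 6-byte src MAC, 2-byte EtherType.
    ethertype = struct.unpack("!H", frame[12:14])[0]
    if ethertype != 0x0800:
        raise ValueError("not IPv4")
    ip = frame[14:]

    # Network layer: IHL (low nibble of byte 0) is the header length in 32-bit words.
    ihl = (ip[0] & 0x0F) * 4
    total_len = struct.unpack("!H", ip[2:4])[0]
    proto = ip[9]
    src_ip = socket.inet_ntoa(ip[12:16])
    dst_ip = socket.inet_ntoa(ip[16:20])
    if proto != 17:
        raise ValueError("not UDP")
    udp = ip[ihl:total_len]

    # Transport layer: source port, destination port, length, checksum.
    sport, dport, ulen, _csum = struct.unpack("!HHHH", udp[:8])
    return (src_ip, sport), (dst_ip, dport), udp[8:ulen]

# Hand-built test frame (checksums left as zero for brevity).
payload = b"hi"
udp_hdr = struct.pack("!HHHH", 40000, 53, 8 + len(payload), 0)
ip_hdr = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + 8 + len(payload), 0, 0, 64, 17, 0,
                     socket.inet_aton("10.0.0.1"), socket.inet_aton("10.0.0.2"))
eth_hdr = bytes(6) + bytes(6) + struct.pack("!H", 0x0800)
frame = eth_hdr + ip_hdr + udp_hdr + payload
print(decapsulate(frame))  # (('10.0.0.1', 40000), ('10.0.0.2', 53), b'hi')
```

Each layer reads only its own header and uses one field (EtherType, then the protocol number, then the destination port) to decide who gets the rest.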

Socket Buffer Queues

After de-encapsulation at the transport layer, the incoming data (a complete datagram for UDP, or the next in-order piece of the byte stream for TCP) needs to be delivered to the correct user-space application.

- Socket Lookup: The kernel uses the destination port number (and potentially the source IP/port for connected sockets) to identify the correct sock structure (and its associated sk_buff receive queue) that the data is destined for.

- sk_receive_queue: The processed sk_buff is then placed into the target socket's sk_receive_queue. This queue acts as a buffer for incoming data that has been received by the kernel but not yet read by the user-space application.

Waking the Process and recv()

For the user-space application to retrieve the data, it needs to be notified that data is available in its socket's receive queue.

- Process Blockage: Typically, an application makes a recv(), read(), or similar system call to read data from a socket. If no data is immediately available in the sk_receive_queue, the kernel blocks the calling process, putting it into a waiting state.

- Waking Up: When the kernel places a new sk_buff into the sk_receive_queue of a socket that has a blocked process waiting, the kernel wakes up that process.

- Data Copy: The now-woken process resumes execution. Its recv() (or read()) system call then copies the data from the sk_buff in the kernel's sk_receive_queue into the user-space buffer provided by the application.

- Return from System Call: Once the data is copied, the recv() call returns to the user-space application, providing the received data and the number of bytes read.

This completes the journey of an incoming packet, bringing data from the network wire all the way to the application that requested it.
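The block-then-wake sequence can be observed from user space. The sketch below is illustrative only: blocked_recv_demo and the 0.2 second delay are arbitrary choices, and it uses UDP over loopback so that a single sendto() produces exactly one wake-up.

```python
import socket
import threading
import time

def blocked_recv_demo(delay: float = 0.2):
    """Return (data, seconds_blocked) for a recvfrom() that waits on a late sender."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rx, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        rx.bind(("127.0.0.1", 0))
        dest = rx.getsockname()

        def late_sender():
            time.sleep(delay)
            tx.sendto(b"ping", dest)  # kernel queues the datagram and wakes the reader

        t = threading.Thread(target=late_sender)
        start = time.monotonic()
        t.start()
        data, _addr = rx.recvfrom(2048)  # receive queue is empty, so the caller sleeps here
        elapsed = time.monotonic() - start
        t.join()
        return data, elapsed

data, elapsed = blocked_recv_demo()
print(data, f"blocked for about {elapsed:.2f}s")
```

The receiving thread consumes no CPU while it waits; it only resumes once the datagram has been placed on the socket's receive queue.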

Transition from User-Space to Kernel-Space

When an application invokes a system call (e.g., send()), the following sequence of events occurs:

- Software Interrupt (Trap): The system call instruction triggers a software interrupt or "trap," which transitions the CPU from user-mode to kernel-mode. This is a privileged operation.

- System Call Handler: The operating system's kernel has a system call table, which maps each system call number to a corresponding kernel function. The kernel's entry code uses the system call number (passed in a register, e.g., rax on x86-64) to locate and execute the appropriate kernel function.

- Argument Validation: Within the kernel, the arguments passed to the system call are checked: file descriptors must refer to open files or sockets, user-space pointers must be accessible, and the calling process must have the necessary permissions.
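Argument validation is easy to see from user space: hand the kernel a descriptor it does not recognize and the call fails before any networking code runs. A small sketch, assuming a POSIX system; the helper name write_to_closed_fd is hypothetical.

```python
import errno
import os
import socket

def write_to_closed_fd() -> int:
    """Return the errno the kernel reports for write() on a closed descriptor."""
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    fd = s.fileno()
    s.close()                   # fd is no longer valid for this process
    try:
        os.write(fd, b"x")      # traps into the kernel, which rejects the fd
    except OSError as exc:
        return exc.errno
    return 0

print(errno.errorcode[write_to_closed_fd()])  # EBADF
```

The error code travels back across the user/kernel boundary as the system call's return value, which Python surfaces as an OSError.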

2. The Kernel's Network Stack

Once data enters the kernel, it navigates the operating system's network stack, a sophisticated system of protocols and drivers that orchestrate network communication.

Socket Layer

The kernel's network stack begins at the socket layer, which manages the socket data structures created by the socket() system call.

- sock Structure: Each socket is represented in the kernel by a sock structure, which stores crucial details like the socket type, protocol, local and remote addresses, port numbers, and pointers to protocol-specific operations.

- sk_buff (Socket Buffer): Data from user applications is typically copied into an sk_buff structure. This fundamental data structure in the Linux kernel's networking subsystem is designed for efficient management of network packet data, including metadata such as packet length, protocol headers, and pointers for linking sk_buffs in queues.

The socket() system call creates the sock structure, which holds vital socket information. Network packet data from user applications is efficiently handled by the sk_buff (Socket Buffer) data structure. Tools like tcpdump, Wireshark, or kernel debuggers analyze these sk_buff structures when inspecting kernel memory or capturing packets.

Software Queues Before the Hardware

Before data is transferred to the Network Interface Card (NIC) driver for physical transmission, several software queues within the kernel manage packet flow, enabling advanced traffic control and buffering.

- qdisc (Queuing Disciplines):

Situated between the IP layer and the NIC driver, the queuing discipline (qdisc) layer is critical for managing outgoing traffic. Here, the kernel employs various algorithms (e.g., Fair Queuing, Class-Based Queuing, fq_codel) to:

- Prioritize Traffic: Ensure high-priority packets (e.g., interactive SSH sessions) are sent before low-priority packets (e.g., bulk data transfers).

- Shape Traffic: Control the rate at which packets are sent to prevent network congestion.

- Buffer Packets: Temporarily hold packets when the network interface is busy, rather than immediately dropping them.

Tools like tc (traffic control) are used to configure and monitor these qdiscs, making them essential for Linux network management, performance tuning, and Quality of Service (QoS).

- The sock Structure and Its Queues: As noted, the sock structure is a central data structure representing an individual socket. Beyond general socket information, its role extends significantly to managing data flow:

- Protocol Function Pointers: The sock structure contains pointers to specific functions implemented by transport layer protocols (e.g., tcp_sendmsg, tcp_recvmsg for TCP; udp_sendmsg, udp_recvmsg for UDP). This allows the generic socket layer to seamlessly invoke the correct protocol-specific logic for sending and receiving data without needing to know the underlying protocol details.

- Send Queue (sk_write_queue): This queue buffers data written by the user-space application via send() or write() calls that has not yet been processed and sent by the transport layer (e.g., segmented by TCP) or handed down to lower network layers.

- Receive Queue (sk_receive_queue): This queue holds incoming data that has been fully de-encapsulated by the kernel's network stack and is awaiting retrieval by the user-space application via recv() or read() calls.

These software queues, particularly the qdisc layer and the sock structure's internal queues, are vital for efficiently buffering, prioritizing, and managing the flow of network traffic before it reaches the physical hardware for transmission.
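The per-socket queues are bounded, and the limits are visible from user space through the SO_SNDBUF and SO_RCVBUF socket options. A minimal sketch (buffer_limits is a hypothetical helper; the numbers printed depend on the operating system and its configuration):

```python
import socket

def buffer_limits():
    """Return (send_limit, recv_limit) in bytes for a fresh TCP socket."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        snd = s.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
        rcv = s.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        return snd, rcv

snd, rcv = buffer_limits()
print(f"SO_SNDBUF={snd} bytes, SO_RCVBUF={rcv} bytes")
```

When the send limit is reached, a blocking send() sleeps until the transport layer drains the queue; when the receive limit is reached, TCP advertises a smaller window and UDP drops datagrams.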

Protocol Layers

The data then traverses the various protocol layers, each adding its own header information.

2.1. Transport Layer (TCP/UDP)

The transport layer is responsible for end-to-end communication between applications.

- TCP (Transmission Control Protocol): If the socket is a TCP socket, the TCP protocol module takes the data from the sk_buff and segments it into appropriate sizes (considering the Maximum Segment Size, MSS). It then adds a TCP header, which includes:

- Source and Destination Port Numbers

- Sequence Number

- Acknowledgement Number

- Window Size

- Checksum

- Flags (SYN, ACK, FIN, RST, PSH, URG)

TCP also handles connection establishment (three-way handshake), flow control, congestion control, and reliable data transfer through acknowledgments and retransmissions.

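Offload note: Modern NICs and kernels offload checksum calculation and segmentation (TSO/GSO). A capture taken on the sending host can therefore show TCP segments larger than the MTU and checksums that look invalid, because the NIC splits the data and fills in the checksums after the capture point.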

- UDP (User Datagram Protocol): For UDP sockets, the UDP module adds a simpler UDP header, which includes:

- Source and Destination Port Numbers

- Length

- Checksum

UDP is connectionless and provides no guarantees of delivery, ordering, or duplicate protection.

Because UDP skips connection management, it’s the weapon of choice for beaconing malware, game servers, and DNS-based tunneling.
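To make the TCP header fields concrete, the sketch below packs a bare 20-byte header and reads the flags back. It is an illustration, not a usable TCP implementation: build_tcp_header and parse_flags are hypothetical helpers, no options are included, and the checksum is left at zero.

```python
import struct

FLAG_BITS = {"FIN": 0x01, "SYN": 0x02, "RST": 0x04, "PSH": 0x08, "ACK": 0x10, "URG": 0x20}

def build_tcp_header(sport, dport, seq, ack, flags, window):
    """Pack a 20-byte TCP header (no options, checksum left at zero)."""
    offset_flags = (5 << 12) | sum(FLAG_BITS[f] for f in flags)  # data offset = 5 words
    return struct.pack("!HHIIHHHH", sport, dport, seq, ack, offset_flags, window, 0, 0)

def parse_flags(header: bytes):
    """Return the names of the flags set in a packed TCP header."""
    offset_flags = struct.unpack("!H", header[12:14])[0]
    return [name for name, bit in FLAG_BITS.items() if offset_flags & bit]

syn = build_tcp_header(40000, 443, seq=1000, ack=0, flags=["SYN"], window=65535)
syn_ack = build_tcp_header(443, 40000, seq=5000, ack=1001, flags=["SYN", "ACK"], window=65535)
print(len(syn), parse_flags(syn), parse_flags(syn_ack))  # 20 ['SYN'] ['SYN', 'ACK']
```

The two headers correspond to the first two steps of the three-way handshake: the SYN carries the client's initial sequence number, and the SYN/ACK acknowledges it with that number plus one.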

2.2. Network Layer (IP)

After the transport layer, the data (now a TCP segment or UDP datagram) moves to the network layer, primarily handled by the Internet Protocol (IP).

- IP Header Addition: The IP module encapsulates the transport layer segment/datagram into an IP packet. It adds an IP header, containing crucial information for routing across networks:

- Source and Destination IP Addresses

- Protocol Number (indicating TCP or UDP as the next layer)

- Time To Live (TTL)

- Header Checksum

- Fragment Offset and Flags (for IP fragmentation)

- Routing Decision: The IP layer consults the kernel's routing table to determine the next hop for the packet. Based on the destination IP address, it decides which network interface the packet should be sent through.

- ARP (Address Resolution Protocol): The frame must be addressed to the MAC address of the next hop, which is the destination host itself if it is on the local network, or the gateway chosen by the routing decision otherwise. If that MAC address is not in the ARP cache, the ARP module sends an ARP request to discover the MAC address associated with the next hop's IP address.
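The Header Checksum field listed above uses the Internet checksum from RFC 1071: a one's-complement sum over the header's 16-bit words. The sketch below computes it for a sample 20-byte header (192.168.0.1 to 192.168.0.199, protocol UDP); internet_checksum is a hypothetical helper, and in practice the kernel or the NIC performs this step.

```python
import struct

def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement sum over 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold the carries back in
    return ~total & 0xFFFF

# Sample IPv4 header with the checksum field (bytes 10-11) zeroed.
header = bytes.fromhex("45000073000040004011" "0000" "c0a80001c0a800c7")
csum = internet_checksum(header)
print(hex(csum))  # 0xb861

# A receiver sums the header including the checksum; a valid header yields 0.
filled = header[:10] + struct.pack("!H", csum) + header[12:]
print(internet_checksum(filled))  # 0
```

Because the TTL changes at every router, each hop has to update this checksum before forwarding the packet.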

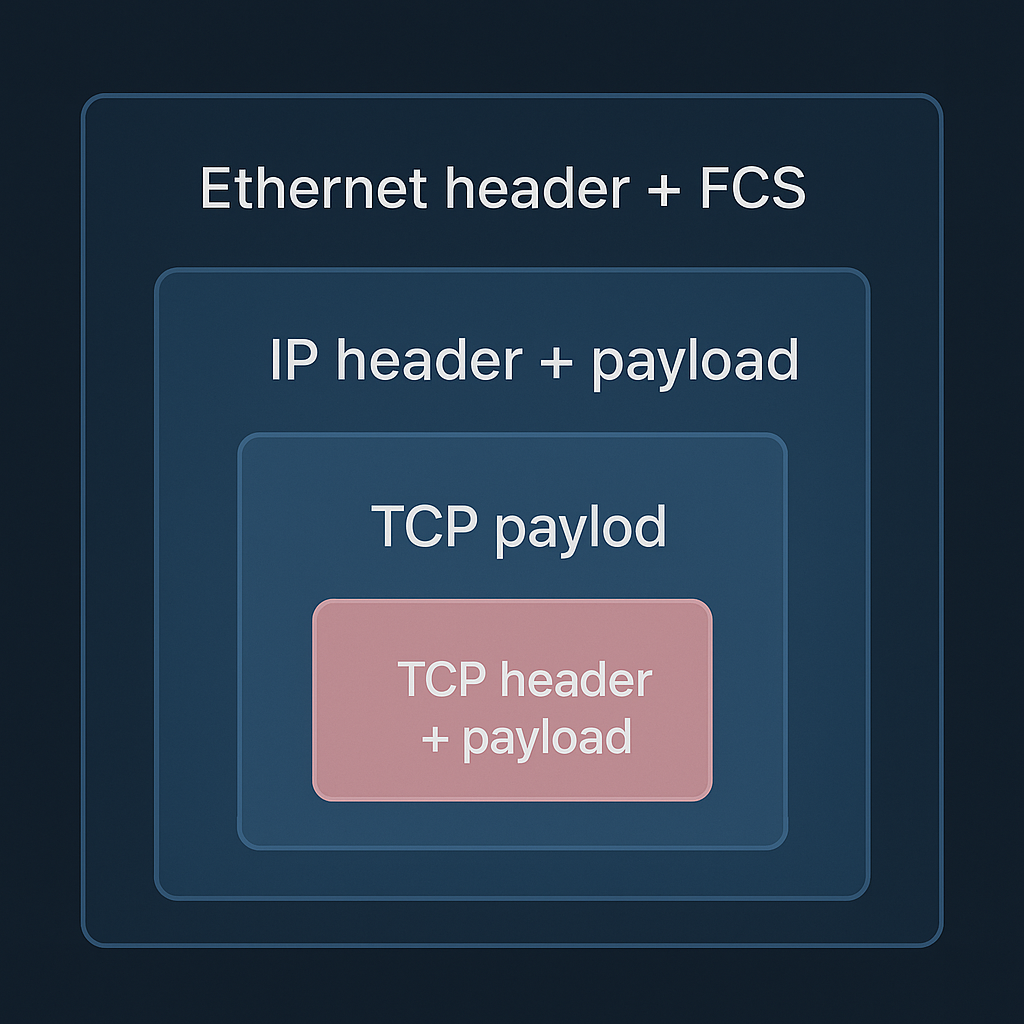

2.3. Link Layer (Ethernet, Wi-Fi, etc.)

The final software layer before the hardware is the link layer, often Ethernet or Wi-Fi.

- MAC Header Addition: The link layer encapsulates the IP packet into a frame (e.g., an Ethernet frame). It adds a link layer header, which includes:

- Source and Destination MAC Addresses

- EtherType (indicating IP as the next layer protocol)

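- Checksum Calculation: A Frame Check Sequence (FCS), computed as a Cyclic Redundancy Check (CRC), is appended to the end of the frame for error detection. On most hardware the NIC computes and appends the FCS itself, so it is usually absent from captures taken on the host.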
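Ethernet's FCS uses the same CRC-32 polynomial that zlib exposes, so its error-detecting behaviour can be sketched in a few lines. The helpers append_fcs and fcs_ok are hypothetical, the little-endian byte order of the appended value is an assumption about how the FCS appears in a byte-oriented capture, and real hardware does this work in silicon.

```python
import struct
import zlib

def append_fcs(frame: bytes) -> bytes:
    """Append the CRC-32 of the frame, least significant byte first."""
    return frame + struct.pack("<I", zlib.crc32(frame))

def fcs_ok(frame_with_fcs: bytes) -> bool:
    """Recompute the CRC over the body and compare it with the trailing 4 bytes."""
    body, fcs = frame_with_fcs[:-4], frame_with_fcs[-4:]
    return struct.pack("<I", zlib.crc32(body)) == fcs

# Zeroed MAC addresses, EtherType IPv4, payload padded to the 46-byte minimum.
frame = bytes(6) + bytes(6) + struct.pack("!H", 0x0800) + b"payload".ljust(46, b"\x00")
wire = append_fcs(frame)
corrupted = bytes([wire[0] ^ 0x01]) + wire[1:]   # flip a single bit
print(fcs_ok(wire), fcs_ok(corrupted))  # True False
```

A receiving NIC that sees a mismatch discards the frame silently, which is why corrupted frames normally never reach the kernel.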

Network Interface Card (NIC) Driver

The link layer interacts with the specific network interface card (NIC) driver.

- Driver Software: The NIC driver is a piece of software that understands how to communicate with the specific network hardware. It receives the complete frame from the link layer.

- DMA (Direct Memory Access): Modern NICs often use DMA to transfer data directly from kernel memory (where the sk_buff resides) to the NIC's internal buffer without involving the CPU. This significantly reduces CPU overhead.

- Hardware Queues: The NIC has internal transmit queues where the driver places frames awaiting transmission.

3. Hardware and Physical Transmission

Finally, the data reaches the physical hardware.

Network Interface Card (NIC)

The NIC is responsible for the actual electrical or optical transmission of the data.

- Framing and Encoding: The NIC takes the digital frame from its transmit buffer and performs further processing:

- Preamble and Start Frame Delimiter (SFD): For Ethernet, a preamble and SFD are added to the beginning of the frame to synchronize the receiving device.

- Encoding: The digital bits are converted into an analog signal suitable for the transmission medium (e.g., electrical pulses for copper cable, light pulses for fiber optic, radio waves for Wi-Fi).

- Collision Detection/Avoidance: For shared media like older Ethernet or Wi-Fi, the NIC handles collision detection (CSMA/CD) or collision avoidance (CSMA/CA).

Physical Medium

The encoded signal is then sent over the physical transmission medium:

- Copper Cable (Ethernet): Electrical signals travel over twisted-pair cables.

- Fiber Optic Cable: Light pulses travel through optical fibers.

- Wireless (Wi-Fi): Radio waves propagate through the air.

Packet Transmission

Once the signal is on the wire (or air), it travels to its destination, potentially passing through various network devices like switches and routers. Each hop involves similar processes of receiving, processing, and retransmitting the packet at different layers.

How Common Tools Intercept the Flow

Understanding where various network tools fit into this process is crucial for effective debugging and analysis:

- System Call Tracing (e.g., strace): This tool operates at the user-space to kernel-space transition, explicitly showing the system calls an application makes (e.g., socket(), bind(), sendto()). It reveals the initial intent of the program regarding network communication. Its counterpart ltrace works one step earlier, showing calls into shared libraries such as libc rather than the system calls themselves.

- Socket Statistics (e.g., ss, netstat): These utilities inspect the kernel's sock structures. They provide real-time information about the state of network sockets (e.g., LISTEN, ESTABLISHED, CLOSE_WAIT), including local and remote addresses, port numbers, and process IDs.

- Packet Capturing (e.g., tcpdump, Wireshark): These tools typically hook in at the link layer, receiving a copy of each packet just before it is handed to the NIC driver for transmission or immediately after reception. This allows detailed inspection of network headers and payloads close to how they appear on the wire; because the tap sits above the NIC, hardware offloads (checksums, TSO) can make a host-side capture differ from the actual wire format.

- Traffic Control (e.g., tc): The tc command directly interacts with the kernel's queuing disciplines (qdiscs) layer. It's used to configure, monitor, and manage the software queues, enabling prioritization, shaping, and buffering of outgoing network traffic.

- Kernel Tracing (e.g., bpftrace, perf): For the deepest insights, these advanced tools can trace specific function calls within the kernel's network stack (e.g., tcp_sendmsg(), dev_queue_xmit(), napi_gro_receive()). They provide granular details on packet processing, performance bottlenecks, and the precise execution path of data through the kernel.

Where Firewalls Fit In

One piece not covered so far is the role of firewalls. The Linux firewall framework, Netfilter, is deeply integrated into the kernel's network stack, placing "hooks" at various strategic points. These hooks allow firewall tools like iptables and nftables to inspect, modify, or drop packets as they traverse the stack. Knowing where the hooks sit helps when debugging, securing, and analyzing network traffic.

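Here's how Netfilter hooks interact with the packet flow. (The NF_IP_* names used below are the IPv4 hook names; current kernels define the same hooks as NF_INET_*.)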

For Outgoing Packets (Local Process Generating Traffic):

- NF_IP_LOCAL_OUT: This hook is positioned in the IP layer, after the routing decision has been made, but before the packet is passed down to the link layer. This is the primary point where rules in the OUTPUT chain of iptables/nftables are processed. It allows filtering of packets that originate from the local machine, whether they are destined for another host or for the local machine itself.

- NF_IP_POST_ROUTING: This hook is located just before the packet is handed to the NIC driver for physical transmission (conceptually before dev_queue_xmit()). This is a crucial point for operations like Source Network Address Translation (SNAT), where the source IP address of an outgoing packet is modified, often to present a consistent public IP address from a private network. Rules in the POSTROUTING chain are processed here.

For Incoming Packets (Destined for Local Process):

- NF_IP_PRE_ROUTING: This is the first IP-layer hook for incoming packets, triggered once the driver and link layer have handed the packet to the IP layer and before any routing decision is made. This is where rules in the PREROUTING chain are processed, and it's commonly used for Destination Network Address Translation (DNAT), allowing redirection of incoming traffic to different internal IP addresses and ports (e.g., port forwarding).

- NF_IP_LOCAL_IN: This hook is triggered in the IP layer after the routing decision has determined that the incoming packet is destined for a local process. It's where rules in the INPUT chain are applied, allowing filtering of traffic specifically targeting services running on the local machine.

For Forwarded Packets (Passing Through the Machine):

- NF_IP_FORWARD: This hook is located in the IP layer, after the routing decision has determined the packet should be forwarded to another interface, but before it hits the NF_IP_POST_ROUTING hook for its onward journey. Rules in the FORWARD chain are processed here, enabling the machine to act as a router or firewall for traffic between different networks.

By strategically placing these hooks, Netfilter provides granular control over the packet's journey, enabling comprehensive firewalling, NAT, and traffic manipulation capabilities at various stages of the network stack. These points are essential for understanding how network security policies are enforced at the operating system level.
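The hook order for the three traffic paths described above can be summarized as a small lookup table. This is a toy model for reasoning about rule placement, not an interface to Netfilter: HOOK_PATHS, traverse, and the rules dictionary are all hypothetical.

```python
HOOK_PATHS = {
    "local_out": ["NF_IP_LOCAL_OUT", "NF_IP_POST_ROUTING"],
    "local_in":  ["NF_IP_PRE_ROUTING", "NF_IP_LOCAL_IN"],
    "forward":   ["NF_IP_PRE_ROUTING", "NF_IP_FORWARD", "NF_IP_POST_ROUTING"],
}

def traverse(path, rules):
    """Walk a packet through its hooks; rules maps hook name -> verdict."""
    visited = []
    for hook in HOOK_PATHS[path]:
        visited.append(hook)
        if rules.get(hook, "ACCEPT") == "DROP":
            return visited, "DROP"   # a drop ends the packet's journey
    return visited, "ACCEPT"

print(traverse("forward", {}))
# (['NF_IP_PRE_ROUTING', 'NF_IP_FORWARD', 'NF_IP_POST_ROUTING'], 'ACCEPT')
print(traverse("local_in", {"NF_IP_LOCAL_IN": "DROP"}))
# (['NF_IP_PRE_ROUTING', 'NF_IP_LOCAL_IN'], 'DROP')
```

The table makes one practical point visible: a rule in the INPUT chain never sees forwarded traffic, and a rule in the FORWARD chain never sees traffic addressed to the machine itself.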

Modern High-Performance Networking (Kernel Bypass)

While the traditional kernel network stack provides robust and flexible communication, certain high-performance applications demand extremely low latency and high throughput that the kernel's overhead can impede. To address these needs, several "kernel bypass" technologies have emerged.

- DPDK (Data Plane Development Kit): DPDK is a set of libraries and drivers for fast packet processing in user-space. It allows applications to directly access Network Interface Card (NIC) hardware, bypassing the kernel entirely for data plane operations. User-space applications utilize their own drivers to read and write packets directly from and to the NIC's buffers, eliminating the need for system calls and context switches, significantly reducing overhead. This is particularly popular in areas like network function virtualization (NFV), software-defined networking (SDN), and high-frequency trading.

- XDP (eXpress Data Path): XDP is a Linux kernel technology that enables the execution of user-defined eBPF (extended Berkeley Packet Filter) programs directly in the NIC driver, at the earliest possible point of packet reception. This allows for ultra-high-performance packet processing before the packet is allocated into an sk_buff and moves further up the traditional network stack. XDP is used for tasks such as DDoS mitigation, load balancing, firewalling, and custom packet filtering with minimal latency. It offers a significant performance boost for specific network functions by performing operations closer to the hardware.

Strictly speaking, only DPDK bypasses the kernel; XDP runs inside it but skips most of the regular stack. Both represent a trade-off, often sacrificing some of the kernel's robust features and security for raw speed and efficiency, making them suitable for specialized high-performance environments.

The Journey from Syscall to Network Packet: A Detailed Overview

The process of sending data from a user-space application to the network involves a complex series of steps, each handled by different layers of the operating system and hardware. This journey can be broken down into the following stages:

- Application Data (User-Space): The data originates within a user-space application.

- send() / write() System Call (User-Space to Kernel-Space Transition): The application initiates a system call (e.g., send(), write()) to transfer data from user-space to the kernel, crossing the user-kernel boundary.

- Kernel Socket Layer (Manages sock structures, copies data to sk_buff): Within the kernel, the socket layer manages socket structures and copies the application data into an sk_buff (socket buffer) structure, which is a key data structure used for network packets in the Linux kernel.

- Transport Layer (TCP/UDP) (Adds TCP/UDP header, forming a segment/datagram): The transport layer (typically TCP or UDP) adds its respective header to the data, forming a segment (for TCP) or a datagram (for UDP). This header includes port numbers and other protocol-specific information.

- Network Layer (IP) (Adds IP header, forming a packet, performs routing): The network layer, primarily using the IP protocol, adds an IP header to the segment/datagram. This forms an IP packet, which contains source and destination IP addresses. At this stage, routing decisions are made to determine the next hop for the packet.

- Link Layer (Ethernet/Wi-Fi) (Adds MAC header and FCS, forming a frame): The link layer (e.g., Ethernet, Wi-Fi) adds a MAC (Media Access Control) header and a Frame Check Sequence (FCS) to the IP packet, creating a frame. The MAC header includes the physical addresses (MAC addresses) of the source and destination on the local network segment.

- NIC Driver (Transfers frame to NIC hardware, potentially via DMA): The Network Interface Card (NIC) driver is responsible for transferring the prepared frame from the kernel's memory to the NIC hardware. This often involves Direct Memory Access (DMA) for efficient data transfer without CPU intervention.

- Network Interface Card (NIC) (Encodes and transmits the signal physically): The NIC hardware takes the frame, encodes it into electrical, optical, or radio signals, and transmits it onto the physical medium.

- Physical Medium (Electrical, optical, or radio transmission): The encoded signal travels across the physical network medium (e.g., Ethernet cable, fiber optic cable, Wi-Fi radio waves) to its destination.
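The cost of each encapsulation step in the list above can be tallied with simple arithmetic. The sketch assumes a UDP payload, an IPv4 header without options, and untagged Ethernet; encapsulation_sizes is a hypothetical helper, and it ignores the preamble and minimum-frame padding.

```python
HEADER_BYTES = [("UDP", 8), ("IPv4", 20), ("Ethernet", 14)]
FCS_BYTES = 4

def encapsulation_sizes(payload_len: int):
    """Return the running size in bytes after each layer adds its header."""
    sizes = [("payload", payload_len)]
    size = payload_len
    for name, hdr in HEADER_BYTES:
        size += hdr
        sizes.append((name, size))
    sizes.append(("Ethernet+FCS", size + FCS_BYTES))
    return sizes

for layer, size in encapsulation_sizes(100):
    print(f"{layer:>12}: {size} bytes")
```

For a 100-byte payload this gives 108, 128, 142, and finally 146 bytes on the wire, so nearly a third of this small frame is header overhead.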

Moving data across a network depends on distinct but interdependent components. This multi-layered process shows how application software, the operating system kernel, and network hardware each handle their own part of network communication.

At the highest level, application software initiates the request. Whether it's a web browser fetching a webpage, an email client sending a message, or a streaming service delivering media, the application generates the initial data and specifies its intended destination. It formulates the data into a format that it understands, but which then needs to be prepared for the underlying network. The application software essentially acts as the user's interface to the network, translating human intentions into digital commands.

Beneath the application layer lies the operating system kernel. This core component of the operating system is the central manager, responsible for allocating system resources, managing memory, and, crucially, handling input/output operations, which include network communication. When an application needs to send data over the network, it makes a system call to the kernel. The kernel then takes over, encapsulating the application data within various network protocols (like TCP/IP). It breaks down the data into smaller packets, adds header information containing source and destination addresses, and ensures the data is ready for transmission. The kernel also manages network interfaces, routing decisions, and handles incoming data, delivering it to the appropriate application.

Finally, the network hardware provides the physical infrastructure for data transmission. This includes components like network interface cards (NICs), cables (Ethernet, fiber optic), routers, switches, and wireless access points. The kernel hands off the prepared frames to the NIC, which then converts the digital data into electrical, optical, or radio signals that can travel across the physical medium. Routers direct traffic between different networks, while switches manage traffic within a local network. Each piece of hardware plays a critical role in moving the data from its source to its destination, interpreting the physical signals and forwarding them according to the addresses embedded by the operating system kernel.

This integrated approach, where each layer builds upon and relies on the one below it, is fundamental to the reliability and efficiency of modern network communication. Without this coordinated effort, from the user-facing application down to the physical cables and radio waves, the seamless exchange of information that we take for granted would simply not be possible.